In this post, we will learn how to create a face recognition system using Python, along with a fully-fledged demo site using Flask, HTML, CSS, and JavaScript.

This project will help you understand how a face recognition system works. Also, if you are a college student, you should definitely consider this project for your internship, as it will add extra value to your resume.

Now, let’s set up our project.

Folder structure

FACE-RECOGNITION/

│── static/

│ ├── styles.css

│── templates/

│ ├── index.html

│ ├── register.html

│ ├── verify.html

│── app.py

│── requirements.txt

This is our folder structure. Now, before we jump into writing our core logic in app.py, let’s first install our project dependencies listed in the requirements.txt file.
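If you would rather not create these files by hand, the layout can be scaffolded with a few lines of Python. This is only a convenience sketch: the helper name `create_skeleton` is made up for this example, and it creates empty placeholder files that you then fill in with the code from the rest of this post.

```python
from pathlib import Path

# Files from the folder structure above, relative to the project root.
PROJECT_FILES = [
    "static/styles.css",
    "templates/index.html",
    "templates/register.html",
    "templates/verify.html",
    "app.py",
    "requirements.txt",
]

def create_skeleton(root):
    """Create empty placeholder files under `root`; existing files are left untouched."""
    root = Path(root)
    created = []
    for relative in PROJECT_FILES:
        path = root / relative
        # Make sure static/ and templates/ exist before touching the file.
        path.parent.mkdir(parents=True, exist_ok=True)
        if not path.exists():
            path.touch()
            created.append(relative)
    return created

if __name__ == "__main__":
    for name in create_skeleton("FACE-RECOGNITION"):
        print("created", name)
```

Running it a second time creates nothing, so it is safe to re-run after you have started editing the files.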

Install Project Dependencies

Before installing the dependencies for our face recognition system, we need to create a virtual environment to keep our project isolated. You can set up a virtual environment using either Python or Miniconda, but I highly recommend Miniconda for a smoother setup.

- If Miniconda is not installed on your system, you can download it from its official site. Installation takes just two or three commands; see the official installation guide for details.

For Windows, run the following commands in PowerShell to install Miniconda. If you are using macOS or Linux, please follow the official installation guide instead.

wget "https://repo.anaconda.com/miniconda/Miniconda3-latest-Windows-x86_64.exe" -outfile ".\miniconda.exe"

Start-Process -FilePath ".\miniconda.exe" -ArgumentList "/S" -Wait

del .\miniconda.exe

After installing Miniconda, let’s create our virtual environment using Miniconda and then install the dependencies from requirements.txt.

Create a Virtual Environment for Our Project

Step-1: Use the command below to create a virtual environment.

conda create -n face-recognition python=3.10 -y

Step-2: Use the command below to activate your virtual environment.

conda activate face-recognition

Install requirements.txt

With the virtual environment activated, create the requirements.txt file shown below. Add this file at the same level as app.py.

Flask

opencv-python

cmake

dlib

face-recognition

numpy

Then run this command to install all the dependencies listed in our requirements.txt file.

pip install -r requirements.txt

If you run into any issues while installing dlib, follow these steps.

Run the following commands one by one:

conda install -c conda-forge cmake

conda install -c conda-forge dlib

Then try again:

pip install -r requirements.txt

This will install dlib along with the remaining dependencies required for your face recognition system.
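Before moving on, it can help to confirm that everything actually landed in the active environment. The following is a small sketch using only the standard library; the helper name `installed_versions` is made up for this example, and it assumes each package registers metadata under the same name used in requirements.txt (this is normally true for pip installs, and usually for conda installs too).

```python
from importlib import metadata

# Distribution names exactly as they appear in requirements.txt.
REQUIRED = ["Flask", "opencv-python", "cmake", "dlib", "face-recognition", "numpy"]

def installed_versions(names):
    """Map each distribution name to its installed version, or None if it is missing."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions

if __name__ == "__main__":
    for name, version in installed_versions(REQUIRED).items():
        print(f"{name:18} {version or 'NOT INSTALLED'}")
```

If dlib shows up as not installed, go back to the conda-forge commands above before continuing.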

Now, let’s create our Flask app for the face recognition system.

app.py

import os
import json
import base64
import cv2
from flask import Flask, render_template, request, jsonify, redirect, url_for
import face_recognition
import numpy as np
from datetime import datetime

app = Flask(__name__)

# Ensure required directories exist
os.makedirs('static/images', exist_ok=True)

# Path to store user data
USER_DATA_FILE = 'user_data.json'

# Initialize user data file if it doesn't exist
if not os.path.exists(USER_DATA_FILE):
    with open(USER_DATA_FILE, 'w') as f:
        json.dump([], f)

def load_user_data():
    with open(USER_DATA_FILE, 'r') as f:
        return json.load(f)

def save_user_data(data):
    with open(USER_DATA_FILE, 'w') as f:
        json.dump(data, f, indent=4)

def base64_to_image(base64_string):
    try:
        # Make sure we're getting the data part of the base64 string
        if ',' in base64_string:
            base64_string = base64_string.split(',')[1]

        # Decode base64 to binary data
        img_data = base64.b64decode(base64_string)

        # Convert binary data to numpy array
        nparr = np.frombuffer(img_data, np.uint8)

        # Decode the numpy array as an image
        img = cv2.imdecode(nparr, cv2.IMREAD_COLOR)
        if img is None:
            raise ValueError("Failed to decode image data")
        return img
    except Exception as e:
        print(f"Error processing image: {str(e)}")
        raise

def get_face_encoding(image):
    if image is None:
        return None

    # Convert BGR to RGB (face_recognition expects RGB)
    rgb_image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

    # Check if conversion was successful
    if rgb_image is None:
        print("Color conversion failed")
        return None

    # Detect face locations
    face_locations = face_recognition.face_locations(rgb_image)
    if not face_locations:
        print("No faces detected in the image")
        return None

    try:
        # Get encoding of the first face found
        face_encoding = face_recognition.face_encodings(rgb_image, face_locations)[0]
        return face_encoding.tolist()
    except Exception as e:
        print(f"Error encoding face: {str(e)}")
        return None

def save_image_file(image, filename):
    try:
        # Ensure the directory exists
        os.makedirs('static/images', exist_ok=True)

        # Save the image
        success = cv2.imwrite(filename, image)
        if not success:
            raise ValueError("Failed to save image file")
        return True
    except Exception as e:
        print(f"Error saving image: {str(e)}")
        return False

def find_user_by_face(face_encoding):
    users = load_user_data()
    if not users:
        return None

    face_encoding = np.array(face_encoding)

    for user in users:
        stored_encoding = np.array(user['face_encoding'])

        # Compare faces with a tolerance
        distance = face_recognition.face_distance([stored_encoding], face_encoding)[0]
        if distance < 0.6:  # Adjust tolerance as needed
            return user

    return None

@app.route('/')
def index():
    return render_template('index.html')

@app.route('/register')
def register():
    return render_template('register.html')

@app.route('/verify')
def verify():
    return render_template('verify.html')

@app.route('/api/register', methods=['POST'])
def api_register():
    data = request.json
    name = data.get('name')
    image_data = data.get('image')

    if not name or not image_data:
        return jsonify({'success': False, 'message': 'Name and image are required'}), 400

    try:
        # Convert base64 to image
        image = base64_to_image(image_data)

        # Get face encoding
        face_encoding = get_face_encoding(image)
        if not face_encoding:
            return jsonify({'success': False, 'message': 'No face detected in the image. Please try again with better lighting.'}), 400

        # Save image file
        timestamp = datetime.now().strftime('%Y%m%d%H%M%S')
        # Keep only letters and digits so the name cannot change the file path
        # (for example through "/" or "..")
        safe_name = ''.join(c if c.isalnum() else '_' for c in name)
        image_filename = f"{safe_name}_{timestamp}.jpg"
        image_path = os.path.join('static/images', image_filename)

        if not save_image_file(image, image_path):
            return jsonify({'success': False, 'message': 'Failed to save image. Please try again.'}), 500

        # Save user data
        users = load_user_data()
        user_data = {
            'id': len(users) + 1,
            'name': name,
            'face_encoding': face_encoding,
            'image_path': image_path,
            'registered_at': datetime.now().isoformat()
        }
        users.append(user_data)
        save_user_data(users)

        return jsonify({
            'success': True,
            'message': f'User {name} registered successfully!',
            'user': {
                'id': user_data['id'],
                'name': user_data['name'],
                'image_path': user_data['image_path']
            }
        })
    except Exception as e:
        print(f"Registration error: {str(e)}")
        return jsonify({'success': False, 'message': f'Error during registration: {str(e)}'}), 500

@app.route('/api/verify', methods=['POST'])
def api_verify():
    data = request.json
    image_data = data.get('image')

    if not image_data:
        return jsonify({'success': False, 'message': 'Image is required'}), 400

    try:
        # Convert base64 to image
        image = base64_to_image(image_data)

        # Get face encoding
        face_encoding = get_face_encoding(image)
        if not face_encoding:
            return jsonify({'success': False, 'message': 'No face detected in the image. Please try again with better lighting.'}), 400

        # Find matching user
        user = find_user_by_face(face_encoding)

        if user:
            return jsonify({
                'success': True,
                'message': f'Welcome back, {user["name"]}!',
                'user': {
                    'id': user['id'],
                    'name': user['name'],
                    'image_path': user['image_path']
                }
            })
        else:
            return jsonify({'success': False, 'message': 'User not recognized. Please register first.'}), 404
    except Exception as e:
        print(f"Verification error: {str(e)}")
        return jsonify({'success': False, 'message': f'Error during verification: {str(e)}'}), 500

if __name__ == '__main__':
    app.run(debug=True)

The app.py file is the main part of our face recognition system. It runs a website using Flask and helps users register and verify their faces. Here’s how it works:

- Setting Up the Project:
  - It checks if a folder called static/images exists. If not, it creates it. This folder stores user images.
  - It also checks if a file called user_data.json exists. If not, it creates the file with an empty list in it. This file saves user information.

- Handling Images & Faces:
  - When a user uploads a photo, the app converts it from base64 format (used in web uploads) into an image.
  - It then detects faces in the image and extracts unique features (face encoding) for the first face it finds.
  - If no face is found, the app tells the user to try again with better lighting.

- Website Pages:
  - / → Shows the homepage.
  - /register → Opens the face registration page.
  - /verify → Opens the face verification page.

- How Registration Works:
  - The user enters their name and uploads a photo.
  - The app extracts the face features, saves the image, and stores the details in user_data.json.

- How Verification Works:
  - The user uploads a new photo for checking.
  - The app extracts face features and compares them with stored data, treating a distance below 0.6 as a match.
  - If a match is found, it welcomes the user. Otherwise, it asks them to register first.

- Running the App:
  - The file runs a Flask server, allowing users to access the website and interact with the system.
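To make the comparison step more concrete: face_recognition.face_distance returns the Euclidean distance between encodings, and app.py accepts the first stored user whose distance is below 0.6. The sketch below is a variation on that idea, not the code from app.py. It uses only the standard library and returns the closest user under the tolerance instead of the first one, which may be worth considering once several people are registered. The function name `closest_user` and the toy 3-number vectors are assumptions for illustration; real encodings have 128 numbers.

```python
import math

TOLERANCE = 0.6  # same cut-off that find_user_by_face uses in app.py

def closest_user(users, probe, tolerance=TOLERANCE):
    """Return the stored user whose encoding is nearest to `probe`, or None.

    `users` is a list of dicts with a 'face_encoding' list, as in user_data.json.
    """
    best_user, best_distance = None, tolerance
    for user in users:
        # Euclidean distance between the stored encoding and the new one.
        distance = math.dist(user["face_encoding"], probe)
        if distance < best_distance:
            best_user, best_distance = user, distance
    return best_user

# Toy 3-dimensional vectors stand in for real 128-dimensional encodings.
users = [
    {"name": "Asha", "face_encoding": [0.0, 0.0, 0.0]},
    {"name": "Ben", "face_encoding": [0.3, 0.0, 0.0]},
]
match = closest_user(users, [0.25, 0.0, 0.0])
print(match["name"] if match else "no match")  # prints "Ben"
```

Both toy users are within 0.6 of the probe here, so a first-match loop would pick Asha, while the closest-match version picks Ben.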

This is our main logic and core backend. Now, let’s add the frontend code, and the project will be ready for demo.
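One detail worth seeing in isolation is the image format that travels between the browser and the backend. The pages send the captured frame as a base64 data URL (the output of canvas.toDataURL), and base64_to_image strips everything before the comma before decoding. Here is a minimal standard-library sketch of that step; the helper name `split_data_url` is made up for this example, and it stops at the raw bytes rather than decoding pixels with OpenCV.

```python
import base64

def split_data_url(data_url):
    """Return (mime_type, raw_bytes) for a base64 data URL or a bare base64 string."""
    header, sep, payload = data_url.partition(",")
    if not sep:
        # No comma: treat the whole input as bare base64, as app.py does.
        header, payload = "", data_url
    mime_type = None
    if header.startswith("data:"):
        mime_type = header[len("data:"):].split(";")[0] or None
    return mime_type, base64.b64decode(payload)

# JPEG files start with the bytes FF D8 FF; the rest here is filler, not a real photo.
sample = "data:image/jpeg;base64," + base64.b64encode(b"\xff\xd8\xff-demo").decode("ascii")
mime, raw = split_data_url(sample)
print(mime, raw[:3] == b"\xff\xd8\xff")  # prints "image/jpeg True"
```

In app.py the decoded bytes are then handed to np.frombuffer and cv2.imdecode to get an image array.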

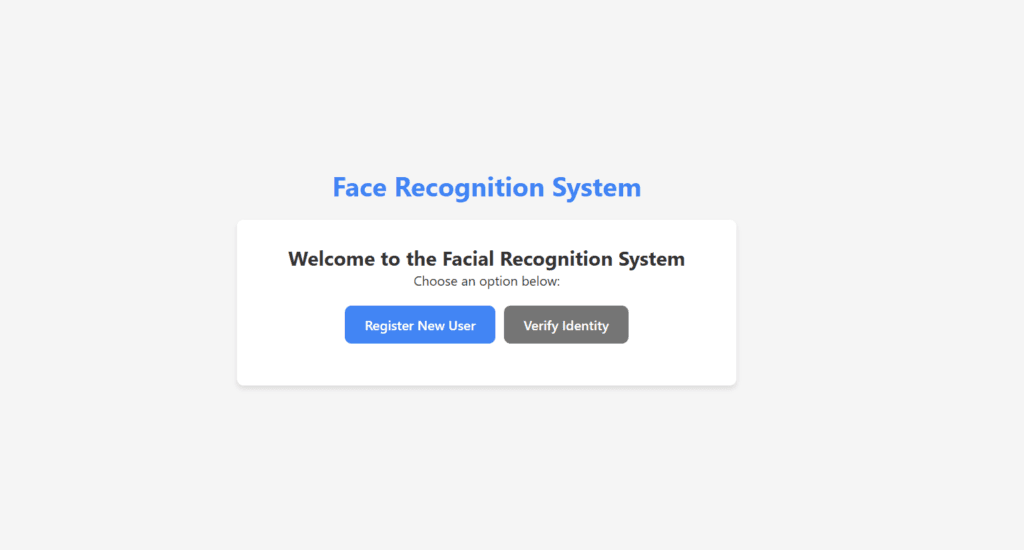

index.html

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>Face Recognition App</title>

<link rel="stylesheet" href="{{ url_for('static', filename='styles.css') }}">

</head>

<body>

<div class="container">

<h1>Face Recognition System</h1>

<div class="card">

<h2>Welcome to the Facial Recognition System</h2>

<p>Choose an option below:</p>

<div class="button-group">

<a href="{{ url_for('register') }}" class="btn primary">Register New User</a>

<a href="{{ url_for('verify') }}" class="btn secondary">Verify Identity</a>

</div>

</div>

</div>

</body>

</html>

register.html

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>Register - Face Recognition App</title>

<link rel="stylesheet" href="{{ url_for('static', filename='styles.css') }}">

</head>

<body>

<div class="container">

<h1>User Registration</h1>

<div class="card">

<div id="camera-container">

<video id="video" autoplay playsinline></video>

<canvas id="canvas" style="display:none;"></canvas>

<div class="camera-overlay">

<div class="face-guide"></div>

</div>

</div>

<div id="preview-container" style="display:none;">

<img id="captured-image" src="" alt="Captured image">

</div>

<div class="form-group">

<label for="name">Your Name:</label>

<input type="text" id="name" placeholder="Enter your full name" required>

</div>

<div class="button-group">

<button id="capture-btn" class="btn primary" disabled>

<span class="loading-indicator" style="display:none;"></span>

Capture Photo

</button>

<button id="retake-btn" class="btn secondary" style="display:none;">Retake Photo</button>

<button id="register-btn" class="btn success" style="display:none;">Register</button>

</div>

<div id="status-message"></div>

<a href="{{ url_for('index') }}" class="back-link">← Back to Home</a>

</div>

</div>

<script>

document.addEventListener('DOMContentLoaded', function() {

const video = document.getElementById('video');

const canvas = document.getElementById('canvas');

const captureBtn = document.getElementById('capture-btn');

const loadingIndicator = captureBtn.querySelector('.loading-indicator');

const retakeBtn = document.getElementById('retake-btn');

const registerBtn = document.getElementById('register-btn');

const nameInput = document.getElementById('name');

const capturedImage = document.getElementById('captured-image');

const cameraContainer = document.getElementById('camera-container');

const previewContainer = document.getElementById('preview-container');

const statusMessage = document.getElementById('status-message');

let stream;

let capturedImageData = null;

// Start camera with improved settings

async function startCamera() {

try {

// Show loading indicator

loadingIndicator.style.display = 'inline-block';

statusMessage.innerHTML = '<div class="info">Initializing camera...</div>';

// Request high-quality video with specific constraints

stream = await navigator.mediaDevices.getUserMedia({

video: {

width: { ideal: 1280 },

height: { ideal: 720 },

facingMode: "user",

frameRate: { min: 15 }

}

});

video.srcObject = stream;

// Wait for video to be ready before enabling capture button

video.onloadedmetadata = function() {

// Hide loading indicator

loadingIndicator.style.display = 'none';

captureBtn.disabled = false;

statusMessage.innerHTML = '<div class="info">Camera ready! Position your face in the circle and click "Capture Photo"</div>';

};

// Handle potential playback errors

video.onerror = function(error) {

loadingIndicator.style.display = 'none';

statusMessage.innerHTML = `<div class="error">Video playback error: ${error.message}</div>`;

};

// Start video playback

video.play().catch(error => {

loadingIndicator.style.display = 'none';

statusMessage.innerHTML = `<div class="error">Video playback failed: ${error.message}</div>`;

});

} catch (err) {

loadingIndicator.style.display = 'none';

statusMessage.innerHTML = `<div class="error">Camera access denied: ${err.message}</div>`;

}

}

// Improved capture photo function

captureBtn.addEventListener('click', function() {

// Disable button to prevent multiple clicks

captureBtn.disabled = true;

// Set canvas dimensions to match video dimensions

canvas.width = video.videoWidth;

canvas.height = video.videoHeight;

const context = canvas.getContext('2d');

// Clear any previous content

context.clearRect(0, 0, canvas.width, canvas.height);

// Draw the current video frame to the canvas

context.drawImage(video, 0, 0, canvas.width, canvas.height);

// Get the image data with proper quality

capturedImageData = canvas.toDataURL('image/jpeg', 0.95);

// Display the captured image

capturedImage.src = capturedImageData;

capturedImage.onload = function() {

// Only show preview after image has loaded successfully

cameraContainer.style.display = 'none';

previewContainer.style.display = 'block';

captureBtn.style.display = 'none';

retakeBtn.style.display = 'inline-block';

registerBtn.style.display = 'inline-block';

};

capturedImage.onerror = function() {

statusMessage.innerHTML = '<div class="error">Failed to capture image. Please try again.</div>';

captureBtn.disabled = false;

};

// Stop the camera stream to save resources

if (stream) {

stream.getTracks().forEach(track => track.stop());

}

});

// Improved retake photo function

retakeBtn.addEventListener('click', function() {

cameraContainer.style.display = 'block';

previewContainer.style.display = 'none';

captureBtn.style.display = 'inline-block';

retakeBtn.style.display = 'none';

registerBtn.style.display = 'none';

statusMessage.innerHTML = '';

capturedImageData = null;

// Clear the captured image

capturedImage.src = '';

// Restart the camera

startCamera();

});

// Register user

registerBtn.addEventListener('click', async function() {

const name = nameInput.value.trim();

if (!name) {

statusMessage.innerHTML = '<div class="error">Please enter your name</div>';

return;

}

if (!capturedImageData) {

statusMessage.innerHTML = '<div class="error">Please capture your photo</div>';

return;

}

// Disable button to prevent multiple submissions

registerBtn.disabled = true;

retakeBtn.disabled = true;

statusMessage.innerHTML = '<div class="info">Processing registration...</div>';

try {

const response = await fetch('/api/register', {

method: 'POST',

headers: {

'Content-Type': 'application/json'

},

body: JSON.stringify({

name: name,

image: capturedImageData

})

});

const result = await response.json();

if (result.success) {

statusMessage.innerHTML = `<div class="success">${result.message}</div>`;

setTimeout(() => {

window.location.href = '/';

}, 2000);

} else {

statusMessage.innerHTML = `<div class="error">${result.message}</div>`;

registerBtn.disabled = false;

retakeBtn.disabled = false;

}

} catch (err) {

statusMessage.innerHTML = `<div class="error">Registration failed: ${err.message}</div>`;

registerBtn.disabled = false;

retakeBtn.disabled = false;

}

});

// Initialize with disabled capture button until camera is ready

captureBtn.disabled = true;

startCamera();

});

</script>

</body>

</html>

verify.html

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>Verify - Face Recognition App</title>

<link rel="stylesheet" href="{{ url_for('static', filename='styles.css') }}">

</head>

<body>

<div class="container">

<h1>Identity Verification</h1>

<div class="card">

<div id="camera-container">

<video id="video" autoplay playsinline></video>

<canvas id="canvas" style="display:none;"></canvas>

<div class="camera-overlay">

<div class="face-guide"></div>

</div>

</div>

<div id="result-container" style="display:none;">

<div id="result-message"></div>

<div id="user-info" style="display:none;">

<img id="user-image" src="" alt="User image">

<h3 id="user-name"></h3>

</div>

</div>

<div class="button-group">

<button id="verify-btn" class="btn primary" disabled>

<span class="loading-indicator" style="display:none;"></span>

Verify Identity

</button>

<button id="retry-btn" class="btn secondary" style="display:none;">Try Again</button>

</div>

<a href="{{ url_for('index') }}" class="back-link">← Back to Home</a>

</div>

</div>

<script>

document.addEventListener('DOMContentLoaded', function() {

const video = document.getElementById('video');

const canvas = document.getElementById('canvas');

const verifyBtn = document.getElementById('verify-btn');

const loadingIndicator = verifyBtn.querySelector('.loading-indicator');

const retryBtn = document.getElementById('retry-btn');

const cameraContainer = document.getElementById('camera-container');

const resultContainer = document.getElementById('result-container');

const resultMessage = document.getElementById('result-message');

const userInfo = document.getElementById('user-info');

const userImage = document.getElementById('user-image');

const userName = document.getElementById('user-name');

let stream;

// Start camera with improved settings

async function startCamera() {

try {

// Show loading indicator

loadingIndicator.style.display = 'inline-block';

resultMessage.innerHTML = '<div class="info">Initializing camera...</div>';

resultContainer.style.display = 'block';

// Request high-quality video with specific constraints

stream = await navigator.mediaDevices.getUserMedia({

video: {

width: { ideal: 1280 },

height: { ideal: 720 },

facingMode: "user",

frameRate: { min: 15 }

}

});

video.srcObject = stream;

// Wait for video to be ready before enabling verify button

video.onloadedmetadata = function() {

// Hide loading indicator

loadingIndicator.style.display = 'none';

verifyBtn.disabled = false;

resultMessage.innerHTML = '<div class="info">Camera ready! Position your face in the circle and click "Verify Identity"</div>';

};

// Handle potential playback errors

video.onerror = function(error) {

loadingIndicator.style.display = 'none';

resultMessage.innerHTML = `<div class="error">Video playback error: ${error.message}</div>`;

};

// Start video playback

video.play().catch(error => {

loadingIndicator.style.display = 'none';

resultMessage.innerHTML = `<div class="error">Video playback failed: ${error.message}</div>`;

});

} catch (err) {

loadingIndicator.style.display = 'none';

resultMessage.innerHTML = `<div class="error">Camera access denied: ${err.message}</div>`;

resultContainer.style.display = 'block';

cameraContainer.style.display = 'none';

}

}

// Improved verify identity function

verifyBtn.addEventListener('click', async function() {

// Disable button to prevent multiple clicks

verifyBtn.disabled = true;

loadingIndicator.style.display = 'inline-block';

// Set canvas dimensions to match video dimensions

canvas.width = video.videoWidth;

canvas.height = video.videoHeight;

const context = canvas.getContext('2d');

// Clear any previous content

context.clearRect(0, 0, canvas.width, canvas.height);

// Draw the current video frame to the canvas

context.drawImage(video, 0, 0, canvas.width, canvas.height);

// Get the image data with proper quality

const imageData = canvas.toDataURL('image/jpeg', 0.95);

resultMessage.innerHTML = '<div class="info">Verifying your identity...</div>';

resultContainer.style.display = 'block';

try {

const response = await fetch('/api/verify', {

method: 'POST',

headers: {

'Content-Type': 'application/json'

},

body: JSON.stringify({

image: imageData

})

});

const result = await response.json();

// Hide loading indicator

loadingIndicator.style.display = 'none';

if (result.success) {

// Stop the camera stream

if (stream) {

stream.getTracks().forEach(track => track.stop());

}

cameraContainer.style.display = 'none';

verifyBtn.style.display = 'none';

retryBtn.style.display = 'inline-block';

resultMessage.innerHTML = `<div class="success">${result.message}</div>`;

userImage.src = `/${result.user.image_path}`;

// Make sure image loads properly

userImage.onload = function() {

userName.textContent = result.user.name;

userInfo.style.display = 'flex';

};

userImage.onerror = function() {

resultMessage.innerHTML += '<div class="error">Failed to load user image.</div>';

};

} else {

resultMessage.innerHTML = `<div class="error">${result.message}</div>`;

retryBtn.style.display = 'inline-block';

verifyBtn.style.display = 'none';

}

} catch (err) {

loadingIndicator.style.display = 'none';

resultMessage.innerHTML = `<div class="error">Verification failed: ${err.message}</div>`;

retryBtn.style.display = 'inline-block';

verifyBtn.style.display = 'none';

}

});

// Improved retry function

retryBtn.addEventListener('click', function() {

cameraContainer.style.display = 'block';

userInfo.style.display = 'none';

verifyBtn.style.display = 'inline-block';

retryBtn.style.display = 'none';

// Restart the camera

startCamera();

});

// Initialize with disabled verify button until camera is ready

verifyBtn.disabled = true;

startCamera();

});

</script>

</body>

</html>

styles.css

/* static/styles.css */

:root {

--primary-color: #4285f4;

--secondary-color: #34a853;

--accent-color: #ea4335;

--background-color: #f5f5f5;

--card-color: #ffffff;

--text-color: #333333;

--border-radius: 8px;

--box-shadow: 0 4px 6px rgba(0, 0, 0, 0.1);

}

* {

margin: 0;

padding: 0;

box-sizing: border-box;

font-family: 'Segoe UI', Tahoma, Geneva, Verdana, sans-serif;

}

body {

background-color: var(--background-color);

color: var(--text-color);

min-height: 100vh;

display: flex;

justify-content: center;

align-items: center;

padding: 20px;

}

.container {

width: 100%;

max-width: 600px;

text-align: center;

}

h1 {

margin-bottom: 20px;

color: var(--primary-color);

}

.card {

background-color: var(--card-color);

border-radius: var(--border-radius);

box-shadow: var(--box-shadow);

padding: 30px;

margin-bottom: 20px;

}

.form-group {

margin-bottom: 20px;

text-align: left;

}

label {

display: block;

margin-bottom: 5px;

font-weight: 600;

}

input[type="text"] {

width: 100%;

padding: 12px;

border: 1px solid #ddd;

border-radius: var(--border-radius);

font-size: 16px;

transition: border-color 0.3s;

}

input[type="text"]:focus {

border-color: var(--primary-color);

outline: none;

}

.button-group {

display: flex;

justify-content: center;

gap: 10px;

margin: 20px 0;

}

.btn {

padding: 12px 24px;

border: none;

border-radius: var(--border-radius);

font-size: 16px;

font-weight: 600;

cursor: pointer;

transition: background-color 0.3s, transform 0.2s;

text-decoration: none;

display: inline-block;

}

.btn:hover {

transform: translateY(-2px);

}

.btn:active {

transform: translateY(0);

}

.primary {

background-color: var(--primary-color);

color: white;

}

.primary:hover {

background-color: #3b78e7;

}

.secondary {

background-color: #757575;

color: white;

}

.secondary:hover {

background-color: #656565;

}

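/* Scoped to buttons so these rules do not collide with the .success message style further down */
.btn.success {
    background-color: var(--secondary-color);
    color: white;
}

.btn.success:hover {
    background-color: #2d9548;
}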

/* Improved camera container styling */

#camera-container {

position: relative;

margin-bottom: 20px;

border-radius: var(--border-radius);

overflow: hidden;

background-color: #000;

width: 100%;

height: 0;

padding-bottom: 75%; /* 4:3 aspect ratio */

}

/* Fix video element to prevent flickering */

#video {

position: absolute;

top: 0;

left: 0;

width: 100%;

height: 100%;

object-fit: cover;

transform: scaleX(-1); /* Mirror effect */

background-color: #000;

}

.camera-overlay {

position: absolute;

top: 0;

left: 0;

width: 100%;

height: 100%;

display: flex;

justify-content: center;

align-items: center;

pointer-events: none;

}

/* Smoother face guide */

.face-guide {

width: 200px;

height: 200px;

border: 3px dashed rgba(255, 255, 255, 0.7);

border-radius: 50%;

box-shadow: 0 0 0 2000px rgba(0, 0, 0, 0.3);

}

/* Better preview container */

#preview-container {

margin: 20px auto;

max-width: 320px;

border-radius: var(--border-radius);

overflow: hidden;

background-color: #f0f0f0;

}

/* Improve captured image display */

#captured-image {

display: block;

width: 100%;

height: auto;

transform: scaleX(-1); /* Mirror effect to match video */

border-radius: var(--border-radius);

}

#status-message {

min-height: 50px;

}

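/* Status messages are rendered as <div> elements; the div prefix keeps these rules off the Register button, which also uses the success class */
div.error, div.success, div.info {
    padding: 10px 15px;
    border-radius: var(--border-radius);
    margin: 10px 0;
    text-align: left;
}

.error {
    background-color: rgba(234, 67, 53, 0.1);
    color: #d32f2f;
    border-left: 4px solid #d32f2f;
}

div.success {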

background-color: rgba(52, 168, 83, 0.1);

color: #388e3c;

border-left: 4px solid #388e3c;

}

.info {

background-color: rgba(66, 133, 244, 0.1);

color: #1976d2;

border-left: 4px solid #1976d2;

}

.back-link {

display: inline-block;

margin-top: 10px;

color: var(--primary-color);

text-decoration: none;

}

.back-link:hover {

text-decoration: underline;

}

#user-info {

display: flex;

flex-direction: column;

align-items: center;

margin: 20px 0;

}

#user-image {

width: 150px;

}

After placing all the files in their proper locations, run app.py using the command below. This will start the project at http://127.0.0.1:5000/.

python app.py
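Once the server is up, you can also exercise the verification endpoint without the browser, which is handy for quick checks. The sketch below uses only the standard library and assumes the default address shown above. The helper names `build_verify_request` and `send` are made up for this example, and the bytes in the demo line are filler rather than a real photo.

```python
import base64
import json
import urllib.error
import urllib.request

def build_verify_request(image_bytes, base_url="http://127.0.0.1:5000"):
    """Build a POST request for /api/verify carrying a JPEG as a base64 data URL."""
    data_url = "data:image/jpeg;base64," + base64.b64encode(image_bytes).decode("ascii")
    body = json.dumps({"image": data_url}).encode("utf-8")
    return urllib.request.Request(
        base_url + "/api/verify",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def send(request):
    """Send the request and return (status_code, parsed_json)."""
    try:
        with urllib.request.urlopen(request) as response:
            return response.status, json.load(response)
    except urllib.error.HTTPError as err:
        # app.py answers 400, 404 and 500 with a JSON body as well.
        return err.code, json.load(err)

# Building the request needs no server; only send() touches the network.
request = build_verify_request(b"\xff\xd8\xff-not-a-real-photo")
print(request.get_method(), request.full_url)
```

With the app running, read a real photo from disk (for example a hypothetical face.jpg), pass its bytes to build_verify_request, and hand the result to send. You should get back the same JSON the verify page receives.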

Output

This is all about how we created a Face Recognition System using Python. I hope this project adds extra value to your resume. Thank you for reading this article—see you in the next one!